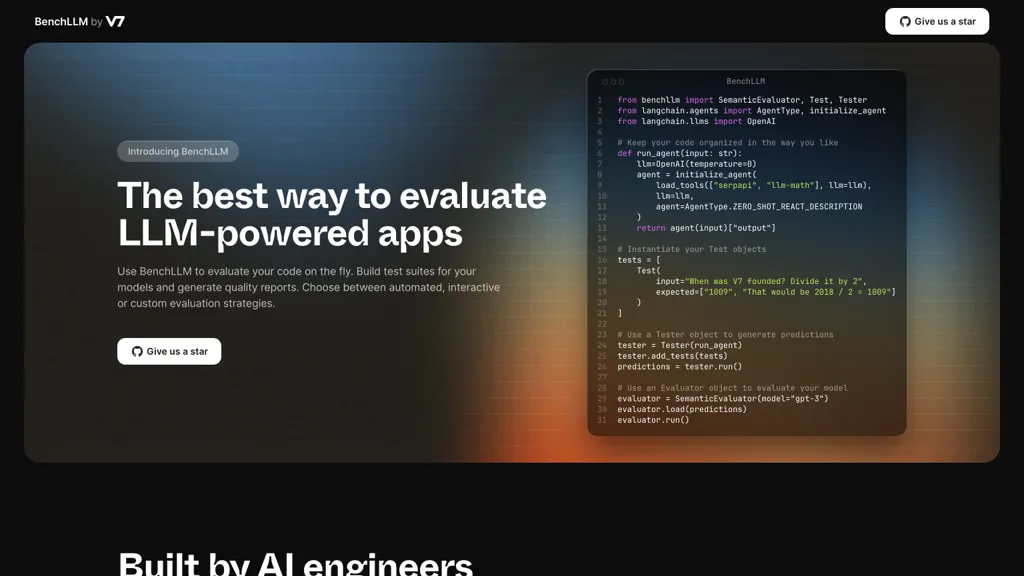

BenchLLM is a tool for testing and evaluating applications powered by LLMs. You choose how to test your apps (automatically, interactively, or with a custom approach), and BenchLLM generates a detailed report of the results.

You build evaluations from three pieces: tests, testers, and semantic evaluators. These work alongside tools such as OpenAI, `langchain.agents`, and `langchain.llms` when you evaluate your models. BenchLLM's command-line interface (CLI) also keeps setup code short and makes test runs quick to start.
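To make the roles of tests, testers, and evaluators concrete, here is a minimal offline sketch of that pattern. It does not use BenchLLM's API: `Case`, `run_cases`, `evaluate`, and `fake_model` are hypothetical names invented for illustration, and the evaluator does a plain string match where a semantic evaluator would compare meaning.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Case:
    """Hypothetical test case: one input and the answers we accept."""
    input: str
    expected: List[str]


def run_cases(model: Callable[[str], str], cases: List[Case]) -> List[str]:
    """Tester role: call the model once per case and collect its outputs."""
    return [model(case.input) for case in cases]


def evaluate(cases: List[Case], outputs: List[str]) -> List[bool]:
    """Evaluator role: exact match after trimming and lowercasing.

    A semantic evaluator would instead judge whether the output and an
    expected answer mean the same thing.
    """
    results = []
    for case, output in zip(cases, outputs):
        accepted = {answer.strip().lower() for answer in case.expected}
        results.append(output.strip().lower() in accepted)
    return results


def fake_model(prompt: str) -> str:
    """Stand-in for an LLM-backed function so the sketch runs offline."""
    return {"What is 1 + 1?": "2"}.get(prompt, "I don't know")


cases = [
    Case(input="What is 1 + 1?", expected=["2", "two"]),
    Case(input="What is the capital of France?", expected=["Paris"]),
]
results = evaluate(cases, run_cases(fake_model, cases))
print(f"{sum(results)}/{len(results)} cases passed")
```

Keeping the test data, the runner, and the evaluator separate means the same cases can be re-run against a new model version, or re-scored with a stricter evaluator, without rewriting anything else.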

BenchLLM lets you track how your models perform in real-world use and catch regressions quickly. It supports OpenAI, LangChain, and other APIs out of the box, so it can handle a wide variety of LLM-powered applications.

Whether you're an AI professional or part of a team building AI products, BenchLLM helps you check that your models are accurate and reliable. Its range of evaluation strategies lets you define tests and generate reports, so you can make well-informed decisions about your LLM-powered applications.